Make fragments#

The first step of the scene reconstruction system is to create fragments from short RGBD sequences.

Input arguments#

The script runs with python run_system.py [config] --make. In [config],

["path_dataset"] should have subfolders image and depth to store the

color images and depth images respectively. We assume the color images and the

depth images are synchronized and registered. In [config], the optional

argument ["path_intrinsic"] specifies the path to a json file that stores

the camera intrinsic matrix (See

Read camera intrinsic for

details). If it is not given, the PrimeSense factory setting is used instead.

Register RGBD image pairs#

30def register_one_rgbd_pair(s, t, color_files, depth_files, intrinsic,

31 with_opencv, config):

32 source_rgbd_image = read_rgbd_image(color_files[s], depth_files[s], True,

33 config)

34 target_rgbd_image = read_rgbd_image(color_files[t], depth_files[t], True,

35 config)

36

37 option = o3d.pipelines.odometry.OdometryOption()

38 option.depth_diff_max = config["depth_diff_max"]

39 if abs(s - t) != 1:

40 if with_opencv:

41 success_5pt, odo_init = pose_estimation(source_rgbd_image,

42 target_rgbd_image,

43 intrinsic, False)

44 if success_5pt:

45 [success, trans, info

46 ] = o3d.pipelines.odometry.compute_rgbd_odometry(

47 source_rgbd_image, target_rgbd_image, intrinsic, odo_init,

48 o3d.pipelines.odometry.RGBDOdometryJacobianFromHybridTerm(),

49 option)

50 return [success, trans, info]

51 return [False, np.identity(4), np.identity(6)]

52 else:

53 odo_init = np.identity(4)

54 [success, trans, info] = o3d.pipelines.odometry.compute_rgbd_odometry(

55 source_rgbd_image, target_rgbd_image, intrinsic, odo_init,

56 o3d.pipelines.odometry.RGBDOdometryJacobianFromHybridTerm(), option)

57 return [success, trans, info]

The function reads a pair of RGBD images and registers the source_rgbd_image

to the target_rgbd_image. The Open3D function compute_rgbd_odometry is

called to align the RGBD images. For adjacent RGBD images, an identity matrix is

used as the initialization. For non-adjacent RGBD images, wide baseline matching

is used as the initialization. In particular, the function pose_estimation

computes OpenCV ORB feature to match sparse features over wide baseline images,

then performs 5-point RANSAC to estimate a rough alignment, which is used as

the initialization of compute_rgbd_odometry.

Multiway registration#

60def make_posegraph_for_fragment(path_dataset, sid, eid, color_files,

61 depth_files, fragment_id, n_fragments,

62 intrinsic, with_opencv, config):

63 o3d.utility.set_verbosity_level(o3d.utility.VerbosityLevel.Error)

64 pose_graph = o3d.pipelines.registration.PoseGraph()

65 trans_odometry = np.identity(4)

66 pose_graph.nodes.append(

67 o3d.pipelines.registration.PoseGraphNode(trans_odometry))

68 for s in range(sid, eid):

69 for t in range(s + 1, eid):

70 # odometry

71 if t == s + 1:

72 print(

73 "Fragment %03d / %03d :: RGBD matching between frame : %d and %d"

74 % (fragment_id, n_fragments - 1, s, t))

75 [success, trans,

76 info] = register_one_rgbd_pair(s, t, color_files, depth_files,

77 intrinsic, with_opencv, config)

78 trans_odometry = np.dot(trans, trans_odometry)

79 trans_odometry_inv = np.linalg.inv(trans_odometry)

80 pose_graph.nodes.append(

81 o3d.pipelines.registration.PoseGraphNode(

82 trans_odometry_inv))

83 pose_graph.edges.append(

84 o3d.pipelines.registration.PoseGraphEdge(s - sid,

85 t - sid,

86 trans,

87 info,

88 uncertain=False))

89

90 # keyframe loop closure

91 if s % config['n_keyframes_per_n_frame'] == 0 \

92 and t % config['n_keyframes_per_n_frame'] == 0:

93 print(

94 "Fragment %03d / %03d :: RGBD matching between frame : %d and %d"

95 % (fragment_id, n_fragments - 1, s, t))

96 [success, trans,

97 info] = register_one_rgbd_pair(s, t, color_files, depth_files,

98 intrinsic, with_opencv, config)

99 if success:

100 pose_graph.edges.append(

101 o3d.pipelines.registration.PoseGraphEdge(

102 s - sid, t - sid, trans, info, uncertain=True))

103 o3d.io.write_pose_graph(

104 join(path_dataset, config["template_fragment_posegraph"] % fragment_id),

105 pose_graph)

This script uses the technique demonstrated in

Multiway registration. The function

make_posegraph_for_fragment builds a pose graph for multiway registration of

all RGBD images in this sequence. Each graph node represents an RGBD image and

its pose which transforms the geometry to the global fragment space.

For efficiency, only key frames are used.

Once a pose graph is created, multiway registration is performed by calling the

function optimize_posegraph_for_fragment.

34def optimize_posegraph_for_fragment(path_dataset, fragment_id, config):

35 pose_graph_name = join(path_dataset,

36 config["template_fragment_posegraph"] % fragment_id)

37 pose_graph_optimized_name = join(

38 path_dataset,

39 config["template_fragment_posegraph_optimized"] % fragment_id)

40 run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name,

41 max_correspondence_distance = config["depth_diff_max"],

42 preference_loop_closure = \

43 config["preference_loop_closure_odometry"])

This function calls global_optimization to estimate poses of the RGBD images.

Make a fragment#

108def integrate_rgb_frames_for_fragment(color_files, depth_files, fragment_id,

109 n_fragments, pose_graph_name, intrinsic,

110 config):

111 pose_graph = o3d.io.read_pose_graph(pose_graph_name)

112 volume = o3d.pipelines.integration.ScalableTSDFVolume(

113 voxel_length=config["tsdf_cubic_size"] / 512.0,

114 sdf_trunc=0.04,

115 color_type=o3d.pipelines.integration.TSDFVolumeColorType.RGB8)

116 for i in range(len(pose_graph.nodes)):

117 i_abs = fragment_id * config['n_frames_per_fragment'] + i

118 print(

119 "Fragment %03d / %03d :: integrate rgbd frame %d (%d of %d)." %

120 (fragment_id, n_fragments - 1, i_abs, i + 1, len(pose_graph.nodes)))

121 rgbd = read_rgbd_image(color_files[i_abs], depth_files[i_abs], False,

122 config)

123 pose = pose_graph.nodes[i].pose

124 volume.integrate(rgbd, intrinsic, np.linalg.inv(pose))

125 mesh = volume.extract_triangle_mesh()

126 mesh.compute_vertex_normals()

127 return mesh

Once the poses are estimated, RGBD integration is used to reconstruct a colored fragment from each RGBD sequence.

Batch processing#

149def process_single_fragment(fragment_id, color_files, depth_files, n_files,

150 n_fragments, config):

151 if config["path_intrinsic"]:

152 intrinsic = o3d.io.read_pinhole_camera_intrinsic(

153 config["path_intrinsic"])

154 else:

155 intrinsic = o3d.camera.PinholeCameraIntrinsic(

156 o3d.camera.PinholeCameraIntrinsicParameters.PrimeSenseDefault)

157 sid = fragment_id * config['n_frames_per_fragment']

158 eid = min(sid + config['n_frames_per_fragment'], n_files)

159

160 make_posegraph_for_fragment(config["path_dataset"], sid, eid, color_files,

161 depth_files, fragment_id, n_fragments,

162 intrinsic, with_opencv, config)

163 optimize_posegraph_for_fragment(config["path_dataset"], fragment_id, config)

164 make_pointcloud_for_fragment(config["path_dataset"], color_files,

165 depth_files, fragment_id, n_fragments,

166 intrinsic, config)

167

168

169def run(config):

170

171 print("making fragments from RGBD sequence.")

172 make_clean_folder(join(config["path_dataset"], config["folder_fragment"]))

173

174 [color_files, depth_files] = get_rgbd_file_lists(config["path_dataset"])

175 n_files = len(color_files)

176 n_fragments = int(

177 math.ceil(float(n_files) / config['n_frames_per_fragment']))

178

179 if config["python_multi_threading"] is True:

180 max_workers = min(max(1, multiprocessing.cpu_count() - 1), n_fragments)

181 # Prevent over allocation of open mp threads in child processes

182 os.environ['OMP_NUM_THREADS'] = '1'

183 mp_context = multiprocessing.get_context('spawn')

184 with mp_context.Pool(processes=max_workers) as pool:

185 args = [(fragment_id, color_files, depth_files, n_files,

186 n_fragments, config) for fragment_id in range(n_fragments)]

187 pool.starmap(process_single_fragment, args)

188 else:

189 for fragment_id in range(n_fragments):

190 process_single_fragment(fragment_id, color_files, depth_files,

191 n_files, n_fragments, config)

The process_single_fragment function calls each individual function explained above.

The run function determines the number of fragments to generate based on the number

of images in the dataset and the configuration value n_frames_per_fragment.

Subsequently, it invokes process_single_fragment for each of these fragments.

Furthermore, it leverages multiprocessing to speed up computation of all fragments.

Results#

Fragment 000 / 013 :: RGBD matching between frame : 0 and 1

Fragment 000 / 013 :: RGBD matching between frame : 0 and 5

Fragment 000 / 013 :: RGBD matching between frame : 0 and 10

Fragment 000 / 013 :: RGBD matching between frame : 0 and 15

Fragment 000 / 013 :: RGBD matching between frame : 0 and 20

:

Fragment 000 / 013 :: RGBD matching between frame : 95 and 96

Fragment 000 / 013 :: RGBD matching between frame : 96 and 97

Fragment 000 / 013 :: RGBD matching between frame : 97 and 98

Fragment 000 / 013 :: RGBD matching between frame : 98 and 99

The following is a log from optimize_posegraph_for_fragment.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 195 edges.

Line process weight : 389.309502

[Initial ] residual : 3.223357e+05, lambda : 1.771814e+02

[Iteration 00] residual : 1.721845e+04, valid edges : 157, time : 0.022 sec.

[Iteration 01] residual : 1.350251e+04, valid edges : 168, time : 0.017 sec.

:

[Iteration 32] residual : 9.779118e+03, valid edges : 179, time : 0.013 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.519 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 179 edges.

Line process weight : 398.292104

[Initial ] residual : 5.120047e+03, lambda : 2.565362e+02

[Iteration 00] residual : 5.064539e+03, valid edges : 179, time : 0.014 sec.

[Iteration 01] residual : 5.037665e+03, valid edges : 178, time : 0.015 sec.

:

[Iteration 11] residual : 5.017307e+03, valid edges : 177, time : 0.013 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.197 sec.

CompensateReferencePoseGraphNode : reference : 0

The following is a log from integrate_rgb_frames_for_fragment.

Fragment 000 / 013 :: integrate rgbd frame 0 (1 of 100).

Fragment 000 / 013 :: integrate rgbd frame 1 (2 of 100).

Fragment 000 / 013 :: integrate rgbd frame 2 (3 of 100).

:

Fragment 000 / 013 :: integrate rgbd frame 97 (98 of 100).

Fragment 000 / 013 :: integrate rgbd frame 98 (99 of 100).

Fragment 000 / 013 :: integrate rgbd frame 99 (100 of 100).

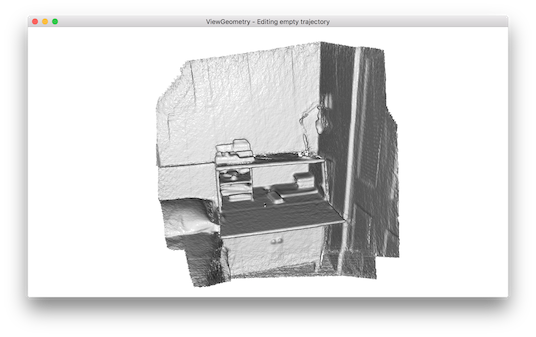

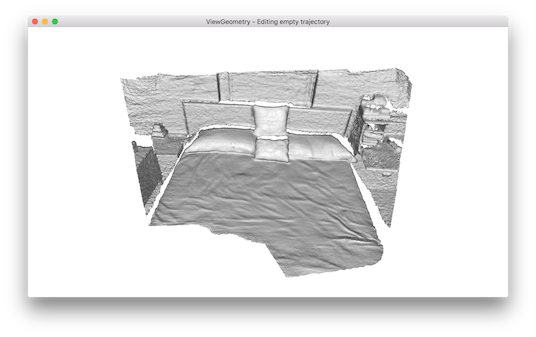

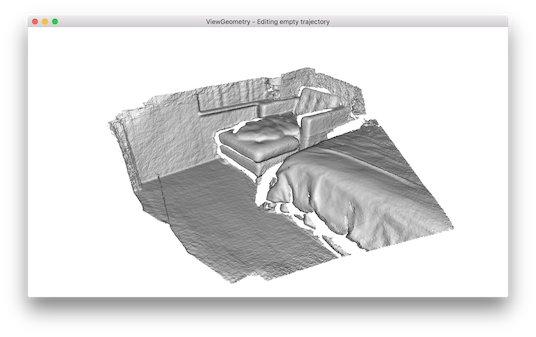

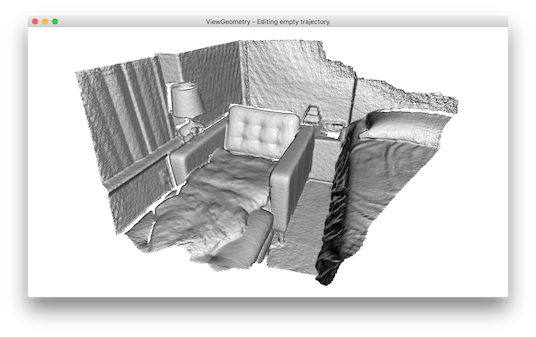

The following images show some of the fragments made by this script.