RGBD odometry

RGBD odometry estimates the camera movement between two consecutive RGBD image pairs. The input is two instances of RGBDImage, and the output is the motion in the form of a rigid body transformation. Open3D implements two RGBD odometry methods: [Steinbrucker2011] and [Park2017].

    # examples/Python/Basic/rgbd_odometry.py

    import open3d as o3d
    import numpy as np

    if __name__ == "__main__":
        pinhole_camera_intrinsic = o3d.io.read_pinhole_camera_intrinsic(
            "../../TestData/camera_primesense.json")
        print(pinhole_camera_intrinsic.intrinsic_matrix)

        source_color = o3d.io.read_image("../../TestData/RGBD/color/00000.jpg")
        source_depth = o3d.io.read_image("../../TestData/RGBD/depth/00000.png")
        target_color = o3d.io.read_image("../../TestData/RGBD/color/00001.jpg")
        target_depth = o3d.io.read_image("../../TestData/RGBD/depth/00001.png")
        source_rgbd_image = o3d.geometry.RGBDImage.create_from_color_and_depth(
            source_color, source_depth)
        target_rgbd_image = o3d.geometry.RGBDImage.create_from_color_and_depth(
            target_color, target_depth)
        target_pcd = o3d.geometry.PointCloud.create_from_rgbd_image(
            target_rgbd_image, pinhole_camera_intrinsic)

        option = o3d.odometry.OdometryOption()
        odo_init = np.identity(4)
        print(option)

        [success_color_term, trans_color_term,
         info] = o3d.odometry.compute_rgbd_odometry(
             source_rgbd_image, target_rgbd_image, pinhole_camera_intrinsic,
             odo_init, o3d.odometry.RGBDOdometryJacobianFromColorTerm(), option)
        [success_hybrid_term, trans_hybrid_term,
         info] = o3d.odometry.compute_rgbd_odometry(
             source_rgbd_image, target_rgbd_image, pinhole_camera_intrinsic,
             odo_init, o3d.odometry.RGBDOdometryJacobianFromHybridTerm(), option)

        if success_color_term:
            print("Using RGB-D Odometry")
            print(trans_color_term)
            source_pcd_color_term = o3d.geometry.PointCloud.create_from_rgbd_image(
                source_rgbd_image, pinhole_camera_intrinsic)
            source_pcd_color_term.transform(trans_color_term)
            o3d.visualization.draw_geometries([target_pcd, source_pcd_color_term])
        if success_hybrid_term:
            print("Using Hybrid RGB-D Odometry")
            print(trans_hybrid_term)
            source_pcd_hybrid_term = o3d.geometry.PointCloud.create_from_rgbd_image(
                source_rgbd_image, pinhole_camera_intrinsic)
            source_pcd_hybrid_term.transform(trans_hybrid_term)
            o3d.visualization.draw_geometries([target_pcd, source_pcd_hybrid_term])

Read camera intrinsic

We first read the camera intrinsic matrix from a JSON file.

    pinhole_camera_intrinsic = o3d.io.read_pinhole_camera_intrinsic(
        "../../TestData/camera_primesense.json")
    print(pinhole_camera_intrinsic.intrinsic_matrix)

This yields:

    [[525.    0.  319.5]
     [  0.  525.  239.5]
     [  0.    0.    1. ]]
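To make the role of these numbers concrete, here is a minimal NumPy sketch of how a pinhole intrinsic matrix relates pixels to 3D points. It is an illustration under the standard pinhole model, not Open3D's implementation. The helper names `backproject` and `project` and the sample pixel are assumptions made for this example.

```python
import numpy as np

# Intrinsic matrix printed above: focal lengths (fx, fy) on the diagonal,
# principal point (cx, cy) in the last column.
K = np.array([[525.0, 0.0, 319.5],
              [0.0, 525.0, 239.5],
              [0.0, 0.0, 1.0]])


def backproject(u, v, depth, K):
    """Lift pixel (u, v) with a depth value to a 3D point in the camera frame."""
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.array([x, y, depth])


def project(point, K):
    """Project a 3D camera-frame point back to pixel coordinates."""
    uvw = K @ point
    return uvw[:2] / uvw[2]


# Hypothetical sample: pixel (400, 300) observed at a depth of 1.5 m.
p = backproject(400.0, 300.0, 1.5, K)
uv = project(p, K)  # round trip recovers the original pixel
```

Back-projecting every valid pixel this way is, conceptually, how an RGBD image becomes a point cloud.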

Note

Many small data structures in Open3D can be read from and written to JSON files, including camera intrinsics, camera trajectories, and pose graphs.

Read RGBD image

    source_color = o3d.io.read_image("../../TestData/RGBD/color/00000.jpg")
    source_depth = o3d.io.read_image("../../TestData/RGBD/depth/00000.png")
    target_color = o3d.io.read_image("../../TestData/RGBD/color/00001.jpg")
    target_depth = o3d.io.read_image("../../TestData/RGBD/depth/00001.png")
    source_rgbd_image = o3d.geometry.RGBDImage.create_from_color_and_depth(
        source_color, source_depth)
    target_rgbd_image = o3d.geometry.RGBDImage.create_from_color_and_depth(
        target_color, target_depth)
    target_pcd = o3d.geometry.PointCloud.create_from_rgbd_image(
        target_rgbd_image, pinhole_camera_intrinsic)

This code block reads two RGBD image pairs in the Redwood format and builds a point cloud from the target pair for later visualization. We refer to Redwood dataset for a comprehensive explanation of the format.

Note

Open3D assumes the color image and depth image are synchronized and registered in the same coordinate frame. This can usually be done by turning on both the synchronization and registration features in the RGBD camera settings.
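As a rough illustration of what happens to the depth channel when an RGBD image is built, the sketch below converts raw integer depth to meters and discards far readings. It assumes depth is stored in millimeters as 16-bit integers, and it assumes a scale of 1000 and a truncation distance of 3 m. These are believed to match the default `depth_scale` and `depth_trunc` arguments of `create_from_color_and_depth`, but check the API reference for your Open3D version. The function `depth_to_meters` is a hypothetical helper, not an Open3D call.

```python
import numpy as np


def depth_to_meters(raw_depth, depth_scale=1000.0, depth_trunc=3.0):
    """Convert raw integer depth to float meters and zero out far readings.

    depth_scale and depth_trunc are assumed values for this sketch.
    """
    depth = raw_depth.astype(np.float32) / depth_scale
    depth[depth >= depth_trunc] = 0.0  # 0 marks "no valid measurement"
    return depth


# Hypothetical 2x3 raw depth image in millimeters (0 = missing measurement).
raw = np.array([[0, 1200, 2500],
                [3500, 4000, 800]], dtype=np.uint16)
depth_m = depth_to_meters(raw)
```

Pixels left at zero carry no depth information and are skipped by later stages, which is why a sensible truncation distance matters for noisy long-range readings.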

Compute odometry from two RGBD image pairs

    [success_color_term, trans_color_term,
     info] = o3d.odometry.compute_rgbd_odometry(
         source_rgbd_image, target_rgbd_image, pinhole_camera_intrinsic,
         odo_init, o3d.odometry.RGBDOdometryJacobianFromColorTerm(), option)
    [success_hybrid_term, trans_hybrid_term,
     info] = o3d.odometry.compute_rgbd_odometry(
         source_rgbd_image, target_rgbd_image, pinhole_camera_intrinsic,
         odo_init, o3d.odometry.RGBDOdometryJacobianFromHybridTerm(), option)

This code block calls two different RGBD odometry methods. Each call returns a success flag, the estimated transformation, and an information matrix (info). The first method is [Steinbrucker2011], which aligns the images by maximizing photo consistency, that is, by minimizing the intensity difference between aligned pixels. The second is [Park2017], which adds a geometric constraint on top of photo consistency. Both methods run at similar speed, but [Park2017] is more accurate in our tests on benchmark datasets and is therefore recommended.
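The difference between a color-only objective and a hybrid one can be sketched with a toy cost function. The example below is an illustration only: it assumes pixel correspondences are already established, and the weight `lambda_geo` and all function names are hypothetical. It is not the exact formulation of either paper, nor of Open3D's Jacobian classes.

```python
import numpy as np


def photometric_residuals(src_intensity, tgt_intensity):
    """Intensity difference between corresponding pixels."""
    return tgt_intensity - src_intensity


def geometric_residuals(src_depth_warped, tgt_depth):
    """Depth difference after warping the source into the target frame."""
    return tgt_depth - src_depth_warped


def color_cost(r_photo):
    """Color-only objective: sum of squared photometric residuals."""
    return float(np.sum(r_photo ** 2))


def hybrid_cost(r_photo, r_geo, lambda_geo=0.5):
    """Hybrid objective: weighted sum of photometric and geometric terms."""
    return float((1.0 - lambda_geo) * np.sum(r_photo ** 2)
                 + lambda_geo * np.sum(r_geo ** 2))


# Hypothetical corresponding samples under some candidate camera pose.
src_i = np.array([0.20, 0.50, 0.80])
tgt_i = np.array([0.20, 0.50, 0.80])  # intensities agree perfectly
src_d = np.array([1.00, 1.50, 2.00])
tgt_d = np.array([1.10, 1.60, 2.10])  # depths disagree by 10 cm

r_p = photometric_residuals(src_i, tgt_i)
r_g = geometric_residuals(src_d, tgt_d)
c_color = color_cost(r_p)         # zero: the color term cannot see the depth error
c_hybrid = hybrid_cost(r_p, r_g)  # positive: the geometric term penalizes it
```

In this toy case the candidate pose looks perfect to the color-only cost even though the depths disagree, which hints at why an added geometric term can improve accuracy.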

Several parameters in OdometryOption():

- minimum_correspondence_ratio: After alignment, the overlap ratio of the two RGBD images is measured. If the overlapping region is smaller than the specified ratio, the odometry module treats the result as a failure.
- max_depth_diff: In the depth image domain, two aligned pixels are considered a correspondence if their depth difference is less than the specified value. A larger value makes the correspondence search more aggressive, but it is prone to unstable results.
- min_depth and max_depth: Pixels whose depth is smaller than min_depth or larger than max_depth are ignored.
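The way these parameters interact can be sketched in NumPy. This is a simplified illustration, not Open3D's code: it assumes the source depth has already been warped into the target frame, it measures the overlap ratio as accepted pairs over all pixels, and the function name `valid_correspondences` and all numeric values are chosen for the example rather than taken from the library defaults.

```python
import numpy as np


def valid_correspondences(src_depth_warped, tgt_depth,
                          max_depth_diff, min_depth, max_depth):
    """Boolean mask of pixel pairs passing the depth-range and depth-difference tests."""
    in_range = ((src_depth_warped > min_depth) & (src_depth_warped < max_depth)
                & (tgt_depth > min_depth) & (tgt_depth < max_depth))
    close = np.abs(src_depth_warped - tgt_depth) < max_depth_diff
    return in_range & close


# Hypothetical aligned depth samples in meters.
src = np.array([1.00, 1.50, 0.00, 5.00, 2.00])
tgt = np.array([1.01, 1.70, 1.00, 5.00, 2.02])

mask = valid_correspondences(src, tgt,
                             max_depth_diff=0.03, min_depth=0.0, max_depth=4.0)

# Pair 0 and pair 4 are accepted; pair 1 differs too much in depth,
# pair 2 has no source depth, and pair 3 lies beyond max_depth.
ratio = mask.sum() / mask.size
success = ratio >= 0.3  # illustrative minimum_correspondence_ratio
```

Raising `max_depth_diff` would accept pair 1 as well, which shows how a looser threshold admits more correspondences at the risk of including wrong ones.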

Visualize RGBD image pairs

    if success_color_term:
        print("Using RGB-D Odometry")
        print(trans_color_term)
        source_pcd_color_term = o3d.geometry.PointCloud.create_from_rgbd_image(
            source_rgbd_image, pinhole_camera_intrinsic)
        source_pcd_color_term.transform(trans_color_term)
        o3d.visualization.draw_geometries([target_pcd, source_pcd_color_term])
    if success_hybrid_term:
        print("Using Hybrid RGB-D Odometry")
        print(trans_hybrid_term)
        source_pcd_hybrid_term = o3d.geometry.PointCloud.create_from_rgbd_image(
            source_rgbd_image, pinhole_camera_intrinsic)
        source_pcd_hybrid_term.transform(trans_hybrid_term)
        o3d.visualization.draw_geometries([target_pcd, source_pcd_hybrid_term])

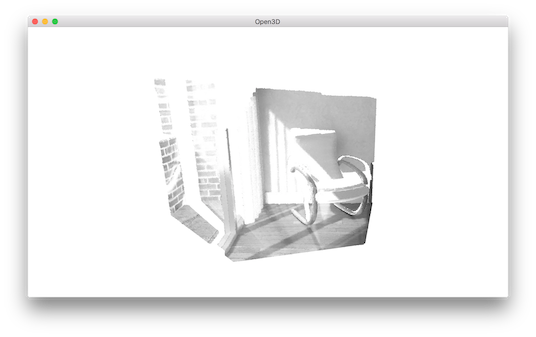

The RGBD image pairs are converted into point clouds and rendered together. Note that the point cloud representing the first (source) RGBD image is transformed with the transformation estimated by the odometry. After this transformation, both point clouds are aligned.
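For readers unfamiliar with homogeneous transformations, the following NumPy sketch shows what applying a 4x4 rigid transformation to a set of points amounts to. It is a conceptual illustration, not the implementation behind `transform`. The helper `transform_points` and the sample matrix are assumptions made for this example.

```python
import numpy as np


def transform_points(points, T):
    """Apply a 4x4 rigid transformation to an (N, 3) array of points."""
    R, t = T[:3, :3], T[:3, 3]
    # Each point p becomes R @ p + t; written row-wise for the whole array.
    return points @ R.T + t


# Hypothetical transform: 90 degree rotation about z, then 0.5 along x.
T = np.array([[0.0, -1.0, 0.0, 0.5],
              [1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0]])
pts = np.array([[1.0, 0.0, 0.0],
                [0.0, 2.0, 0.0]])
moved = transform_points(pts, T)
```

With the odometry result in place of `T`, the same operation moves every source point into the target camera frame, which is why the two clouds overlap in the rendering.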

Outputs:

    Using RGB-D Odometry
    [[ 9.99985131e-01 -2.26255547e-04 -5.44848980e-03 -4.68289761e-04]
     [ 1.48026964e-04  9.99896965e-01 -1.43539723e-02  2.88993731e-02]
     [ 5.45117608e-03  1.43529524e-02  9.99882132e-01  7.82593526e-04]
     [ 0.00000000e+00  0.00000000e+00  0.00000000e+00  1.00000000e+00]]
    Using Hybrid RGB-D Odometry
    [[ 9.99994666e-01 -1.00290715e-03 -3.10826763e-03 -3.75410348e-03]
     [ 9.64492959e-04  9.99923448e-01 -1.23356675e-02  2.54977516e-02]
     [ 3.12040122e-03  1.23326038e-02  9.99919082e-01  1.88139799e-03]
     [ 0.00000000e+00  0.00000000e+00  0.00000000e+00  1.00000000e+00]]
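A 4x4 matrix is hard to read at a glance, so it can help to summarize it as a rotation angle and a translation length. The sketch below does this for the color-term result printed above, using only NumPy. The variable names are illustrative, and the translation is in meters only under the assumption that the depth images were scaled to meters.

```python
import numpy as np

# Color-term transformation copied from the printed output.
T = np.array([
    [9.99985131e-01, -2.26255547e-04, -5.44848980e-03, -4.68289761e-04],
    [1.48026964e-04, 9.99896965e-01, -1.43539723e-02, 2.88993731e-02],
    [5.45117608e-03, 1.43529524e-02, 9.99882132e-01, 7.82593526e-04],
    [0.0, 0.0, 0.0, 1.0],
])

R, t = T[:3, :3], T[:3, 3]

# Sanity checks: a rotation matrix is orthonormal with determinant +1.
orthonormal = np.allclose(R @ R.T, np.eye(3), atol=1e-6)
det = np.linalg.det(R)

# Rotation angle from the trace identity: trace(R) = 1 + 2 * cos(theta).
cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
angle_deg = np.degrees(np.arccos(cos_theta))
translation_norm = np.linalg.norm(t)

print(f"rotation angle: {angle_deg:.2f} deg, translation: {translation_norm:.4f}")
```

Small values for both quantities are what one would expect between consecutive frames, so unusually large ones are a quick hint that the odometry may have failed even when the success flag is set.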